A Country of Trolls

ABOUT FIVE YEARS ago, the Indian Government was busy looking for a pool of teenaged hackers to fight off cyber offensives targeted at its strategic installations, including the national power grid, gas pipelines and other public utilities. The reason that Richard Jeffrey Danzig, cyber security adviser to former US President Barack Obama, offered to Open about the need to hire young adults and teenagers in similar operations was that there was 'a generation gap' in understanding anything cyber. This former secretary of the US Navy under President Bill Clinton referred to the senior leaders of cyber security programmes as 'digital immigrants', those who came to this new realm late in life. Then there are 'digital natives', the young ones who believe that if you can imagine something, you can create it. 'All countries would be well-advised to open channels for recruiting such younger people, including those who may not be traditionally trained,' Danzig had explained to Open, emphasising the need to co-opt young adults and teenagers to the cause of enhancing a country's cyber security prowess ('The Nation Wants the Smart Hacker', August 4th, 2014).

Late last month, the Oxford Internet Institute (OII), whose website calls itself 'a multidisciplinary research and teaching department of the University of Oxford, dedicated to the social science of the Internet', identified India along with six other countries, including China, for an altogether different reason, and not exactly for building a cyber defence wing, often called the fourth arm of the armed forces. It was, instead, for influencing foreign public opinion, primarily over Facebook and Twitter, through cyber troop activities. Besides China and India, the others were Iran, Pakistan, Russia, Saudi Arabia and Venezuela.

Mumbai-based cyber security specialist Ritesh Bhatia, whose company, V4web, offers solutions to fight cyber strikes on the Government as well as the private sector, laughs, "I am not one bit surprised about this development. It has been along expected lines." Three others who asked not to be named but are involved in ethical hacking, two of them based out of Delhi and another in Hyderabad, contend that the extent of use of social media for political propaganda has been very high in India. They also suggest that Indian players in the field of manipulative propaganda, also called computational propaganda, even offer training to recruits from other countries. In fact, OII has stated that Sri Lankan trolls received training in India. That major companies such as political consultancy Cambridge Analytica and IT giant Silver Touch were involved in political campaigns in India is proof of the country's growing capacity to influence perceptions locally and globally, they concede.

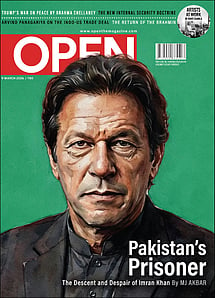

Imran Khan: Pakistan’s Prisoner

27 Feb 2026 - Vol 04 | Issue 60

The descent and despair of Imran Khan

In March 2018, it was data scientist Christopher Wylie who blew the whistle on Cambridge Analytica, which handled the Donald Trump campaign. He disclosed that Cambridge Analytica managed to illegally get Facebook information of 87 million people and used such data to cook up narratives on social media to drum up White supremacist sentiments and offer them legitimacy in the US. Computational propaganda, according to the definition given by co-author of the OII report, Samantha Bradshaw, uses three elements—automation, algorithms and Big Data—to enhance propaganda for the digital age to influence people based on their ideas, values and beliefs. In 2017, OII found 28 countries to be running troll farms; in 2018 the figure went up to 48; and in 2019, they found 70.

The comprehensive report lays bare propaganda unleashed by political parties of all hues to stay ahead of the propaganda race. In a write-up, Bradshaw cites a wave of online misinformation that followed the February 14th, 2019 terrorist attack by Jaish-e-Mohammed in Pulwama district of Kashmir that killed at least 40 personnel. The disinformation centred on a doctored photo of then Congress president Rahul Gandhi standing next to the suicide bomber, an image that was debunked as fake news by fact-checking site Boomlive.in. The report says that the Hindi text accompanying the photo insinuated that the Congress was party to the crime. The OII report adds, 'The geopolitical incident prompted major concerns, as doctored, misleading and outdated images and videos circulated on social media platforms and reached millions of additional viewers through mainstream media. This could not have come at a more sensitive time: India's 900 million eligible voters—including 340 million Facebook users and 230 million WhatsApp users—will take part in India's general election, the largest exercise of democracy in history.'

The OII report also talks about proof of 'formal and informal knowledge diffusion' happening across geographic lines. It notes, 'For example, during the investigations into cyber troop activity in Myanmar, evidence emerged that military officials were trained by Russian operatives on how to use social media… Similarly, cyber troops in Sri Lanka received formal training in India… Leaked emails also showed evidence of the Information Network Agency in Ethiopia sending staff members to receive formal training in China… While there are many gaps in how knowledge and skills in computational propaganda are diffusing globally, this is also an important area to watch for future research and journalistic inquiry.'

The new OII report, titled The Global Disinformation Order: 2019 Global Inventory of Organised Social Media Manipulation, co-authored by Philip Howard, Director, OII, and Bradshaw, Researcher at OII, warns that the 'cyber troops' that Facebook and Twitter have punished for manipulating perceptions abroad through social media do not capture the full gravity of the situation. 'Yet,' the report says, 'we can confidently begin to build a picture of this highly secretive phenomenon.' Foreign-influence operations are an important area of concern, the report says, emphasising that attributing computational propaganda to foreign state actors still remains a challenge. The report notes that Facebook and Twitter have begun publishing limited information about 'influence operations' on their platforms in the seven countries mentioned earlier, which include India and China. Emails sent to the Indian foreign secretary for comment on these charges remained unanswered.

Sangeeta Mahapatra, Research Associate at the Hamburg-based German Institute of Global and Area Studies, who closely monitors social-media campaigns in India and elsewhere, offers a cautious response: 'Like psych-ops during wartime, disinformation has emerged as a tactic of narrative and thought control during peacetime. While in form and function, it is nothing new, in size, it is now staggering because social media and messaging services have made it easier to reach the targeted audience. The solution is in the problem: make it difficult to reach the audience. Disinformation has not been disincentivised by any state; if many states are resorting to it then it is an unacknowledged but normal method of power enhancement by them.' She hastens to add that in India and China, the focus of disinformation actors is primarily on the domestic audience. 'They would presumably venture into foreign influence operations when they feel the stakes are high enough. For China, the Hong Kong protests of 2019 may have increased the stakes and for India, it may well be the Kashmir issue. But there is no substantial evidence as such of India's capacity for impactful foreign influence campaigns. As platforms are increasingly setting up control mechanisms to prevent outside interference for "track and target" operations of foreign disinformation actors, foreign state actors need domestic actors in the targeted state to lend scale to their campaigns. For example, as Facebook has been taking down fake pages created by organised disinformation actors, they are now paying non-state actors to use their personal pages to spread disinformation,' she points out. According to her, their other tactics include fishwrapping, using deepfakes and cheapfakes, false memes, and slanted or spliced videos, and promoting these through more human sources as bots are being increasingly detected. Bots are programs that mimic human behaviour.

THE OII REPORT, meanwhile, makes a scathing attack on the countries that use troll farms. It says, 'In 45 out of the 70 countries we analysed, we found evidence of political parties or politicians running for office who have used the tools and techniques of computational propaganda during elections. Here, we counted instances of politicians amassing fake followers, such as Mitt Romney in the United States, Tony Abbott in Australia, or Geert Wilders in the Netherlands. We also counted instances of parties using advertising to target voters with manipulated media, such as in India, or instances of illegal micro-targeting such as the use of the firm Cambridge Analytica in the UK Brexit referendum by Vote Leave. Finally, we further counted instances of political parties purposively spreading or amplifying disinformation on social networks, such as the WhatsApp campaigns in Brazil, India and Nigeria.'

According to OII, Indian trolls rely mainly on bots and human accounts, whereas several other countries, such as Iran, also use cyborgs and stolen accounts to further their agenda. OII lists India among countries with medium cyber troop capacity, meaning those that have teams with a much more consistent form and strategy (compared with other categories such as minimal and low groups), involving full-time staff members who are employed year-round to control the information space. The fourth and final category is high cyber troop capacity. Countries on that list are China, Egypt, Iran, Israel, Myanmar, Russia, Saudi Arabia, Syria, the United Arab Emirates, Venezuela, Vietnam and the US. The report says: 'These teams do not only operate during elections but involve full-time staff dedicated to shaping the information space. High-capacity cyber troop teams focus on foreign and domestic operations.' Despite the temporary nature of troll hires in India, the report notes, 'multiple teams ranging in size from 50-300 people [are] used for social media manipulation.' Indian operations involve multiple contracts, and advertising expenditures are valued at over $1.4 million, it states. Unlike in the case of Iran, Israel, China and other countries, the OII report doesn't blame Government agencies directly for running troll farms in India. The players listed in it are 'politicians and parties; private contractors; civil society organisations; and citizens'. The categories of campaigns that troll farms in India are involved in do not include suppression of dissent; they fall under 'support' for the Government, attacking the opposition, and driving divisions.

A note by Ualan Campbell-Smith and Bradshaw of OII says that external interference in India has been minimal. 'The deliberate spread of disinformation by politicians and political parties has often led to misinformation—the accidental spread of false content—as a result of hyper-connectivity and digital illiteracy. However, there have been a few cases of foreign interference by countries such as Pakistan who set up a series of fake accounts and Facebook pages about issues to do with India's general election. Following the Kashmir attack, individuals linked to the Pakistan Army used Facebook and Instagram accounts to inflame tensions with India and push claims over Kashmir,' it says.

The Global Cyber Troops Inventory of OII, the report says, uses a three-pronged methodological approach to identifying instances of social media manipulation by government actors. This involves: 1) a content analysis of news article reporting; 2) a secondary literature review; and 3) expert consultations. The OII report also says that social-media manipulation in India has gone up in 2019 compared with 2017. The note by Campbell-Smith and Bradshaw explains, 'Political parties are now working with a wider-range of actors, including private firms, volunteer networks, and social-media influencers to shape public opinion over social media. At the same time, more sophisticated and innovative tools are being used to target, tailor, and refine messaging strategies including data analytics, targeted advertisements, and automation on platforms such as WhatsApp.'

At a time when hacking ATMs and intercepting high-speed transactions are losing their appeal for new-age hackers, officials are worried about the capacity that cyberspace offers to those who recklessly follow political propaganda. WIRED magazine reported this August that hackers are 'now able to take control of the speakers in your house to launch aural barrages at high volume that can harm human hearing, or even have psychological effects'. The incentive to join troll farms is not just the satisfaction of seeing the political party they are attached to gain the upper hand, says an official close to the matter. He says that fake news offers financial incentives and that some people derive fun from seeing "virality turning violent".

Take Christopher Blair, whom the BBC called the 'Godfather of fake news', a resident of Portland, US, who made news some years ago with his rabid comments on social media that went viral. Here is a sample of his posts: 'BREAKING: Clinton Foundation Ship Seized at Port of Baltimore Carrying Drugs, Guns and Sex Slaves.' By the time he was called out for his excesses, he had earned a lot of money through ads on his websites.

AS A RESULT OF campaigns to spot such mischief, social-media platforms and messaging apps have been cracking down on wrongdoers for a while now. According to a report, Facebook has launched a feature that allows its Instagram users to flag stories and posts to fact-checkers to check for fake content. Yet, monitoring vicious content remains a Herculean task. In India, for instance, WhatsApp had come under fire over incidents of lynching after images of people branded as cow smugglers were shared through the platform. As cow vigilantes went on a crime spree, WhatsApp was forced to put in place controls to make mass sharing of dangerous content through its communication app cumbersome; it had to put out appeals across TV, radio and print, asking people to help combat hoaxes. Besides setting up an Indian arm of the company and appointing a grievance officer, WhatsApp had also set a limit on forwarding messages. The Indian experiment was later replicated globally ('How WhatsApp Is Changing Indian Politics', January 28th, 2019). WhatsApp, like Facebook, has tied up with fact-checkers to trace fake content. However, tracking inflammatory messages at hyper-local levels is tough at a time when internet and mobile phone penetration in India is rising at a fast clip.

From hyper-local to global, Indian troll farms seem to wield enormous influence. Reports like the one by the OII serve as a reminder of the rot that has set in. Yet, containing the menace is easier said than done. Whoever said technology is neutral may have got it wrong: it is only as neutral or biased as its users.