For Dharmic Robots

CAN INTELLIGENT MACHINES overthrow humans? After the extraordinary progress in Artificial Intelligence (AI), especially in Machine Learning, the possibility that machines are not far from human-level intelligence has caught the attention of scientists and the general public. Strictly speaking, that is not true yet, and fortunately so for humans; the feats that impress us so much (in chess or Go, say, or in reading X-rays) are examples of skills in narrow, specific domains. We can also comfort ourselves that these machines are not self-aware: they are merely doing very fast computations without any context; that is, they have competence without comprehension.

The kind of intelligence that superior androids might have would be Generalised Intelligence, where they would have what amounts to 'consciousness' of themselves as distinct from humans. They do not, today, have a sense of 'self', the kind of thing you know a small child has picked up when he or she starts talking about himself or herself in the first person, 'I' and 'me', instead of in the third person. Some higher-order animals show this when they recognise themselves in a mirror as distinct entities ('me') rather than as other animals (only a few, like elephants and primates, can do this).

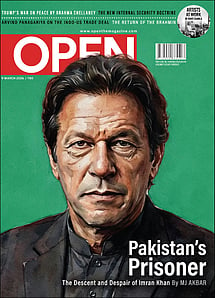

It is when AI machines pick up consciousness that they would also need an ethical system. They would no longer be pure automatons, but beings that make ethical choices. They may be like Skynet in the Terminator films, which deliberately chooses to turn upon its creators. They would make choices and need to be given a conscience, an ethical framework.

What would happen in an interaction between a conscious machine and a universal consciousness is also an interesting philosophical discussion, as Subhash Kak wrote recently ('Will Artificial Intelligence become Conscious?'). From the earliest speculations about robots and cyborgs, the moral element has vexed thinkers. In Frankenstein, the Creature, while monstrous, still mourns the creator it hounded to his death. In Rossum's Universal Robots, the Czech play that coined the word 'robot', androids rise in revolt and eliminate their human masters. In Fritz Lang's 1927 masterpiece Metropolis, androids live as sub-human slaves. This enslaving of robots is a recurring theme. What if they revolt and take over, enslaving humans instead?

Humans have a lot of experience with ethical dilemmas, and moral philosophers categorise our perspectives as deontological or teleological: the first based on absolute notions of duty, the second based on the outcome of an action. It is hard to imagine that a machine could intuitively grasp the former, although it could likely do the latter, by assigning weights to outcomes in a utilitarian fashion: if the outcome is good, then the action was good too.
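To make the teleological approach concrete, here is a minimal sketch of what 'assigning weights to outcomes' could look like. Everything in it is an assumption made for illustration: the `Outcome` record, the two candidate actions, and above all the numeric weights, which no real system is known to use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    description: str
    probability: float  # assumed chance that this outcome follows the action
    value: float        # hypothetical weight: 0 is neutral, negative is harm


def expected_value(outcomes):
    """Probability-weighted sum of the outcome weights for one action."""
    return sum(o.probability * o.value for o in outcomes)


def choose_action(actions):
    """Return the name of the action whose outcomes score highest."""
    return max(actions, key=lambda name: expected_value(actions[name]))


# Hypothetical choices facing a self-driving car; all numbers are invented.
actions = {
    "brake": [
        Outcome("stops in time", 0.75, 0.0),
        Outcome("minor collision", 0.25, -4.0),
    ],
    "swerve": [
        Outcome("avoids obstacle", 0.5, 0.0),
        Outcome("hits barrier", 0.5, -8.0),
    ],
}

best = choose_action(actions)
print(best)
```

The arithmetic is trivial; the ethical difficulty lies entirely in who chooses the weights, and on what grounds.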

To a first approximation, it is possible to assert that Indic Dharma is inherently deontological (all life, human or animal or plant, has sanctity and it is our duty to preserve it), whereas some other systems are inherently teleological and zero-sum: there are winners and losers. Which of these, then, is appropriate for androids and cyborgs? Do androids have duties? Does a self-driving car have a duty to its passengers or to society in general? Will it, and should it, consider the lives of its passengers more valuable than those of random pedestrians on the road? Indeed, what is the dharma of a self-driving car? If an android nears the definition of personhood, if it has intelligence, self-awareness and free will, then it must have a swadharma.

Machines may need to be programmed with a dharma, or a deontological, Kantian categorical imperative, and that is what Isaac Asimov's Laws of Robotics suggest: 'A robot may not injure a human being, or, through inaction, allow a human being to come to harm. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.'
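One way to read the three Laws is as a strict priority ordering: any breach of the First Law outweighs any breach of the Second, which in turn outweighs any breach of the Third. The sketch below encodes that reading only. The `Action` record and its three yes/no flags are hypothetical simplifications; how a machine would actually determine that an action 'harms a human' is the hard part, and it is assumed away here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    harms_human: bool     # would injure a human, or allow harm through inaction
    disobeys_order: bool  # would go against an order given by a human
    endangers_self: bool  # would put the robot's own existence at risk


def law_violations(action):
    """Violations ordered by law priority; tuples compare left to right."""
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose(actions):
    """Pick the action that breaks the highest-priority laws the least."""
    return min(actions, key=law_violations)


# Hypothetical case: the robot has been ordered to stay put,
# but a human nearby is in danger.
candidates = [
    Action("stay put as ordered", harms_human=True,
           disobeys_order=False, endangers_self=False),
    Action("rescue", harms_human=False,
           disobeys_order=True, endangers_self=True),
]

print(choose(candidates).name)
```

Because False sorts before True, the rescue wins even though it breaks both lower-priority laws. A lexical ordering of this kind cannot express degrees of harm or harm to one human weighed against harm to another.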

So long as robots remained in science fiction, or were glorified calculators, or were confined to factories, simple laws like Asimov's were sufficient. But once you let robots loose on the streets, as in self-driving vehicles, things take on a whole new dimension. Once artificial intelligences are in close proximity with ordinary humans, with the capacity to hurt them, Asimov's laws will need to get a lot more nuanced.

In 2001: A Space Odyssey, we see conflict between deontological and teleological principles where an AI might take a decision humans abhor. HAL 9000, the sentient computer overseeing a deep-space mission, concludes that the humans on board are likely to jeopardise its mission, and proceeds to kill them. Its sense of duty to its mission overrides any sense of compassion it may have been programmed with. In Blade Runner, the replicant cyborgs, intent on self-preservation, murder humans. It is interesting to note in passing that in these two classic films, as in the other great sci-fi film of all time, Solaris, and even in Blade Runner 2049, memory plays a major role. In a sense, they suggest that memory is the linchpin of humanness. So can we give androids a swadharma by implanting false 'memories' that guide moral behaviour?

The worry about artificial intelligences increases as they enter positions that require empathy, a very human emotion. Consider a judge or a doctor as candidates for replacement or at least assistance by AI. In both cases, the 'pro' argument suggests that the AI would always be up to date with all the legal precedent or medical knowledge. It will also not suffer from human prejudices or fatigue, will not suffer burnout, and it will work much faster.

In countries where judges are bribed or threatened into acquiescence, there will be very real temptation to let an impartial computer do the job. As for doctors, often it's not the pills, but the empathy and reassurance given that enables the patient's body to cure itself. Besides, no computer can draw out a person's family background, his dreams and worries. So can computers turn doctors into healers whose humanity will come to the fore?

There is a flip-side to this mechanised perfection, though. In a critique of data analytics, Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, former Wall Street quant jock Cathy O'Neil explores how the mindless use of data analytics leads to flawed decisions and systems, as the assumptions made by data scientists may well be wrong. These wrong axioms will be baked into algorithms that are opaque black boxes and cannot be challenged. Such systems end up discriminating unfairly against certain groups.

At least in O'Neil's cases, the assumptions are made by humans. A school district boss may decree that a good teacher is one whose pupils scored well in standardised tests (thus deprecating an inspirational teacher whose pupils are challenged to excel in life). In a deep-learning AI system, however, such conclusions are deduced by the machine itself from a trove of data given to it. O'Neil gives an example from the US criminal justice system wherein a Black or Hispanic defendant from the ghetto automatically gets a harsher sentence because the algorithm identifies their environment (poor schools, friends or relatives who have been in jail) as markers of criminal intent, regardless of whether the accused is more prone to crime or not.

Thus unfairness and social damage may result from an unintentional choice of poor data sets. Unfortunately, many data sets for AI learning are chosen by young White males from Silicon Valley who may not have broad exposure to the world; thus their unconscious biases could be internalised by AI as well. A small illustration of this came when chatbots (that can do machine learning based on watching, say, Twitter conversations) became racist, misogynist and bigoted, just by watching people on social networks. That was like watching a noir movie unfold slowly.

But what if a machine-learning system consumes material where the lives of some people are not worth much, as it might deduce by reading about genocide, say, in Cambodia? Now imagine that this robot has to make a life-and-death decision in traffic when it cannot avoid an accident. But it has a choice: it can either kill two Cambodians or one White American through its actions. What will it do? This is a version of the famous Trolley Problem that philosophers have been arguing about: would you kill one person to save several if you see a trolley speeding on rails towards a group of unsuspecting people?

Now consider competition, not cooperation, between androids. In an experiment, Google's DeepMind exhibited startling behaviour. Two copies of a complex deep-learning algorithm were made to compete with each other in a game to gather apples. All was well when there were plenty of apples. As soon as apples became scarce, they demonstrated aggressive behaviour: knocking each other out with laser beams in order to cheat and hoard resources. Simpler deep-learning algorithms, significantly, were more likely to cooperate than complex ones. Perhaps the behaviour of HAL in 2001 was not so abnormal after all.

Furthermore, what if AI gains a level of generalised intelligence that makes androids more intelligent on average than humans, a possibility called the Singularity? This is the stuff of nightmares such as in the Terminator and Matrix series. In both cases, superior AI decides to dominate and enslave humans.

The worry about this sort of behaviour has led to the creation of the 'OpenAI' initiative, which attempts to impose some ethical constraints on what androids are programmed with.

All this is in the case of a priori benign androids. What about robot soldiers, or even ordinary weapons kitted out with AI? The issues get more serious. If killer robots are unleashed on civilians, their army will likely retaliate with its own robots. Today, military planners war-game scenarios in which swarms of autonomous drones attack in unison. ISIS has used simple, home-made drones to good effect, as they are hard to shoot down and can easily deliver a grenade as payload. Now imagine, like a locust swarm, a thousand AI-enhanced drones forming an intelligent network and attacking in unison.

The consequences of AI-based warfare are serious and may well lead to an extinction event: of humans, that is.

Thus, the moral and ethical dimension of AI needs attention, not only the technical and business aspects. For normal AI systems, there must be a set of boundaries and a defined dharma that creators of AI adhere to. These must act as checks and balances on the behaviour of an android.

As for war-robots, they too should have a code of conduct. Who will monitor this? If there are asymmetric assumptions, robots with the more war-like codes will always defeat the ones with the more benign ones: so who gets to evolve a Geneva Convention, as it were, for war robots?