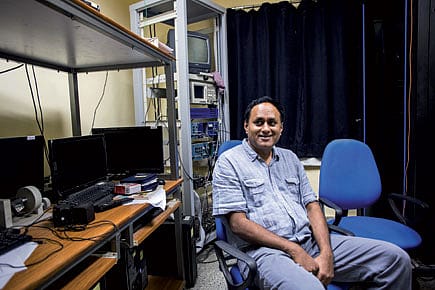

Aditya Murthy: The brain traveller

Aditya Murthy's lab is reminiscent of a beehive, its occupants busy collecting pollen and nectar. Here, in a nondescript corner of the Indian Institute of Science (IISc) in Bengaluru, Murthy and his students spend hours formulating questions and designing experiments to understand the processes that occur in the brain in response to stimuli. His lab is housed within the Primate Research facility, where a maze of corridors and stairs leads you to the researchers at work.

Currently, the team is studying the science behind everyday actions that we often take for granted: answering the phone, picking up a cup of tea, or biting into an apple. They are interested in how we do what we do, the split-second movements that require a complex yet smooth coordination of our hands and eyes.

Neuroscientists across the world have been trying to answer the same question. Murthy, an associate professor and cognitive neuroscientist at IISc, and his team have already arrived at some new insights. Using computational analysis of experiments on human participants, they have studied how the nervous system processes visual information and converts it into motor behaviour. For certain tightly coupled tasks, they contend, a precise and dedicated neuronal circuit controls hand-eye movement.

What does that mean? Functionally, hand and eye movements are independent and distinct actions, and the way each is controlled at its end point is very different. Eye movement is controlled by three pairs of muscles, while hand movements depend on 23. The only thing the two share is that the signal to initiate either movement originates in the higher brain, the cortex; it is this region that coordinates the complex interactions between the hand and the eye (the motor and visual systems).

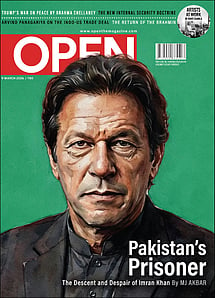

Imran Khan: Pakistan’s Prisoner

27 Feb 2026 - Vol 04 | Issue 60

The descent and despair of Imran Khan

Dressed in a T-shirt and jeans, Murthy attributes his success to asking the right questions. "Then the answers turn up automatically," he believes. It all started when, during his postgraduate studies in macromolecules at the University of Mumbai, he came across the book From Neuron to Brain by Stephen Kuffler (considered the 'Father of Modern Neuroscience') and another eminent neuroscientist, John Nicholls. The first few chapters had him hooked. Ever since, he has been keen to understand vision as deeply as possible.

Murthy then went on to obtain a doctoral degree under the guidance of Allen Humphrey at the Department of Neurobiology, University of Pittsburgh. There he examined the neural mechanisms involved in processing motion in the visual system. Later, his postdoctoral training on primate visuomotor systems with Professor Schall in the psychology department at Vanderbilt University deepened his understanding of the subject. In 2001, he moved back to India and joined the National Brain Research Centre at Manesar, Gurgaon, where he had the opportunity to spend a few days with Nobel Laureate Torsten Wiesel, whose work on information processing in the visual system won him the Nobel Prize in Physiology or Medicine in 1981.

Murthy himself has spent many years studying visual systems, in particular how brain mechanisms shape decisions about even the simple act of making a saccadic eye movement towards a stimulus. A 'saccade' is a rapid, jerky movement of both eyes that redirects the line of sight to a new location, bringing it into 'foveal', or central, vision. He has also studied the implicit memory of eye movements: how the eye remembers where to look. For instance, while searching for a person in a crowd, the eye does not return to spots it has already scanned, having noted that the person isn't there.

Hand-eye coordination is believed to have evolved as an adaptation by early primates to hunting insects in an arboreal environment. In 1974, the American anthropologist Matt Cartmill suggested in his paper 'Rethinking Primate Origins' that vision replaced smell as the dominant sense when early primates took to hunting insects in trees, and that they developed forward-facing eyes for better vision. Later, these primates began to use their forelimbs to reach out and grasp. This need drove the evolution of hand-eye coordination.

Murthy explains that the action of reaching out is guided by vision. Central vision is more accurate than peripheral vision, so it makes sense for the hand to be 'foveated' by the eyes to guide one's reach.

To analyse this action of reaching out for an object, Atul Gopal PA, a doctoral student with Murthy, conducted a series of experiments on 24 participants (20 men and four women) aged between 25 and 28, none of whom had any neurological deficits. To rule out variations due to external factors, the experiments were set up in a controlled lab environment. The enclosure housed a test rig consisting of two horizontal glass panels, one above the other, supported by wooden columns on either side. The lower glass panel held a screen connected by cables to a computer that recorded and analysed the data. Each participant was seated in front of the screen with his or her chin on a chin-rest and the head clamped from above to minimise movement, so that only central vision could be used. A sensor was strapped to the forefinger with Velcro to track hand position, along with a battery-powered LED for visual feedback. Each participant also wore an eye-frame fitted with a high-speed camera to capture eye movements.

Readings were taken on separate days and in discrete blocks. On average, the team ran about 500 trials per session, with a break after every 250. Each trial began with the participant placing a forefinger on a white fixation spot at the centre of the screen. A green light, the target, then appeared on either side of the fixation spot, and the participant had to move the finger towards it as quickly as possible. This is the 'reaching out' phase of any hand action.

In other trials, participants were asked to perform a 'redirect' task. A second target appeared, and they had to move their forefinger towards it instead of the first. This second target appeared on the opposite side from the first, and participants had no way of predicting whether, or when, it would appear. Consider a batsman who expects a particular type of delivery and prepares to play a particular stroke, but the ball changes course and he has to replace his initial action with another.

To determine whether control of a saccadic eye movement is influenced by control of the hand movement, Gopal also looked at 'reaction time' distributions. Reaction time is the time a person takes to respond to a target; it includes the time the brain needs to detect the target as well as the time required to plan and initiate a movement. Consider the batsman again. If the ball is what he expects, he will react quickly; if it is different, he will be slower. Similarly, Gopal observed that as the interval between the appearance of the two targets increases, participants make more errors, because they are already committed to the first action, and their reaction times grow longer.

On average, hand reaction times were 100 milliseconds longer than eye reaction times. When the eye or the hand moved alone, the variability of their reaction times, measured as standard deviation, differed significantly. But when the hand and eye moved together, the standard deviations of the eye and hand reaction times were comparable. This unique signature, Gopal argues, can only be explained if both the hand and the eye are controlled by a single command.

Gopal further tested this hypothesis with electromyography (EMG), attaching electrodes to the shoulder muscles to record their activity while participants made their reaching movements. Interestingly, shoulder muscle activity typically began before the saccade, even though the hand itself started moving only after the saccade had begun.

This is the first time that computational evidence of a dedicated pathway for hand-eye coordination has been reported. Murthy envisages that his research could help in designing sophisticated robots that mimic human actions. But he suspects that although robots can be very precise, they will lack the capacity for flexible or dynamic movements. He believes that this dedicated circuit is context-dependent and shows up only in stereotypical tasks that do not require much decision-making. For neuroscientists such as Murthy, such routine behaviours are the most interesting ones. As he says, "The magic is in the mundane."

(Deepa Padmanaban is a Bangalore-based science writer)