The Race for Smart Glasses

At a TED 2025 event in Canada, Google's head of augmented reality demonstrated the company's AR glasses. They looked like regular spectacles but could connect to a phone, scan documents, summon an AI assistant and do myriad other things. This was only a demonstration, and a mass-market launch is still some way off. Meta has been the first mover on this front, with its glasses already available through a collaboration with Ray-Ban. However, many big players are now lining up to dominate what promises to be the next big thing in wearables. Google is teaming up with Samsung to release glasses next year, while Apple, which already sells the Vision Pro, a very advanced but expensive and clunky virtual reality headset, is working on cheaper glasses for a wider customer base. According to a Bloomberg article, Apple's CEO Tim Cook is personally invested in developing the glasses and sees them as the company's next big product. Meanwhile, Meta's Mark Zuckerberg, who already has a head start, is developing another line of smart glasses, Orion, with advanced features such as hand-gesture controls for a range of activities, from browsing to games.

A NEW SOCIAL NETWORK

OpenAI, the firm behind ChatGPT, is reportedly building its own social media platform and working on a prototype with a "feed" feature. It may be built around the company's image-generation capabilities. It remains unclear whether OpenAI will launch this social network as a standalone app or integrate it into the ChatGPT app, although some have described it as an X-like network.

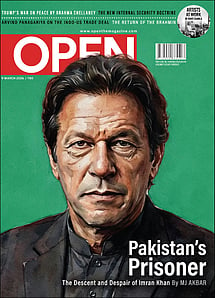

ROBOT BOXERS

A video of Chinese robotics company Unitree's compact humanoid robot performing kung fu moves went viral last month. The company has now posted a video of a humanoid model, the Unitree G1, fighting a human boxer, followed by one in which it faces another G1. It has also announced a boxing event in which two humanoid robots will fight each other.

DECODING DOLPHIN-SPEAK

Google has developed an AI model to help decipher the language of dolphins. Called DolphinGemma, the model has been trained on the Wild Dolphin Project's database of sounds from wild Atlantic spotted dolphins. It is designed to identify the patterns and structure in dolphin sounds, allowing it to "predict the likely subsequent sounds in a sequence" and perhaps even help scientists figure out what the dolphins are "saying".