The Damned Science of Psephology

WHILE WRITING ABOUT psephology, its failures, successes and challenges in the context of the recent round of Assembly elections, I cannot avoid sharing my experience of conducting pre-election polls at the Centre for the Study of Developing Societies (CSDS). This is all the more important because we are now in the firing line, having ended up with inaccurate projections for Uttar Pradesh and Uttarakhand, though our estimates for Punjab and Goa were reasonably close. But yes, we failed to fully gauge the voters' mood in UP and Uttarakhand, and we must accept this as our failure and introspect to find out what may have gone wrong with our polls. The polls captured the direction of the voters' mood but failed to measure the intensity of their inclination for change. This raises questions about how accurate this method of gauging public mood is, and what may be required to make it more precise and useful, but this failure by no means signals the end of psephology.

When a poll fails to make an accurate assessment, the first explanation offered by 'critics' is that one cannot possibly measure the mood of so many people by questioning only a small number of them. This is essentially a question about sample size. Those who measure voting behaviour, or any other public opinion, through surveys would never explain a failure by pointing to a relatively small sample, or argue that they need a bigger one to make more accurate estimates.

Even though our recent estimates have been inaccurate, I would not offer the excuse of having used too small a sample in any of the four states. Our sample size was 6,500 in UP, 3,500 in Punjab, 1,800 in Uttarakhand and 1,600 in Goa. In the past we have conducted many polls with samples of this size and got reliable estimates. So, clearly, bigger samples are not the automatic answer to the question of why polls sometimes fail to forecast results as well as one would wish.
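One way to see why sample size is rarely the culprit is to compute the sampling margin of error for the sample sizes quoted above. The sketch below is a textbook illustration, not CSDS's own procedure: it assumes simple random sampling and a 95% confidence level, and the design-effect value of 2 is a hypothetical stand-in for the extra variance a clustered field sample typically carries. The function names are illustrative only.

```python
import math

# Sample sizes reported for the four state polls.
SAMPLES = {"Uttar Pradesh": 6500, "Punjab": 3500, "Uttarakhand": 1800, "Goa": 1600}


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of an approximate 95% confidence interval for a proportion p,
    assuming simple random sampling (an idealisation). p=0.5 is the worst case."""
    return z * math.sqrt(p * (1 - p) / n)


def margin_with_design_effect(n: int, deff: float, p: float = 0.5) -> float:
    """Margin after inflating the variance by a design effect (deff), as a
    clustered sample typically would. The deff value passed in is an assumption."""
    return margin_of_error(n, p) * math.sqrt(deff)


if __name__ == "__main__":
    for state, n in SAMPLES.items():
        srs = margin_of_error(n) * 100  # in percentage points
        clustered = margin_with_design_effect(n, deff=2.0) * 100  # hypothetical deff
        print(f"{state:14s} n={n:5d}  +/-{srs:.1f} pts (SRS)  +/-{clustered:.1f} pts (deff=2)")
```

Under these assumptions the margin on a party's vote share comes to roughly ±1.2 points for 6,500 interviews and roughly ±2.5 points for 1,600. Because the margin shrinks only with the square root of the sample, halving it takes about four times as many interviews. A miss far larger than these figures therefore points to non-sampling error (who turns out, who answers truthfully) rather than to the size of the sample.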

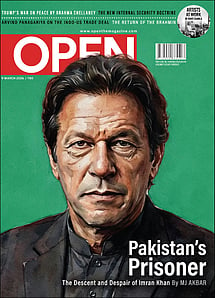

Imran Khan: Pakistan’s Prisoner

27 Feb 2026 - Vol 04 | Issue 60

The descent and despair of Imran Khan

Another question often asked is whether the polls were inaccurate because the 'right kind of people' were not interviewed. By that phrase, critics sometimes mean educated, articulate and intelligent people. But for polls, the right kind of people are not those who fit that description; they are all those who actually turn out to vote on election day. What is needed is a representative sample of voters, and without one, getting forecasts right is difficult. We at CSDS have always interviewed the right kind of people and have got accurate results. Even in this round of surveys in four states, our samples were truly representative, but we sadly failed to measure the mood accurately. There must have been something on the ground that we could not capture. What it was, we can only speculate about at the moment; we cannot yet give a clear answer.

While the samples were representative with regard to the proportions of groups in the population, it is difficult to say whether those groups turned out to vote in the same proportions. Differential turnout among communities that vote differently can distort estimates, but there is no evidence that voters of some groups turned out in much larger numbers than others in a way that would have affected the vote estimates. In polls, we work on the assumption that communities will turn out in proportion to their share of the population, and this method has measured voting patterns fairly accurately many times in the past. Further analysis and introspection are needed before anything definite can be said on this issue.
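The turnout concern can be made concrete with a small worked example. Everything below is hypothetical: two invented communities with made-up population shares, turnout rates and levels of support for an unnamed 'Party A'. The illustrative function `party_a_share` weights each community either by its population share alone, which is the proportional-turnout assumption, or by population share multiplied by turnout.

```python
# All figures are hypothetical and chosen only to illustrate the arithmetic;
# they are not survey data.
GROUPS = {
    # name: (share of electorate, turnout rate, share of voters backing Party A)
    "Community X": (0.40, 0.70, 0.60),
    "Community Y": (0.60, 0.55, 0.35),
}


def party_a_share(groups: dict, use_turnout: bool) -> float:
    """Return Party A's share of the votes cast.

    use_turnout=False weights each community by its electorate share alone
    (the proportional-turnout assumption); use_turnout=True weights it by
    electorate share multiplied by that community's turnout rate.
    """
    votes_cast = 0.0
    votes_for_a = 0.0
    for share, turnout, backing in groups.values():
        weight = share * turnout if use_turnout else share
        votes_cast += weight
        votes_for_a += weight * backing
    return votes_for_a / votes_cast


if __name__ == "__main__":
    assumed = party_a_share(GROUPS, use_turnout=False)
    actual = party_a_share(GROUPS, use_turnout=True)
    print(f"Proportional-turnout estimate: {assumed:.1%}")
    print(f"Turnout-weighted estimate:     {actual:.1%}")
    print(f"Shift: {(actual - assumed) * 100:+.1f} points")
```

With these invented figures, the proportional-turnout assumption puts Party A at 45.0 per cent, while weighting by turnout gives about 46.5 per cent. A 15-point turnout gap between the two groups thus moves the estimate by about one and a half points: small on its own, but enough to matter in a close contest, and the shift tends to grow as the gap in turnout or in voting preference widens.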

Critics also argue that voters are very smart, that they do not tell the truth when asked about their voting intentions, and that this is why polls get inaccurate results. There may be some truth in this, particularly if such questions are asked in the open, but it is important not to exaggerate the effect. I fail to understand why people would lie about their voting preferences. They might prefer not to answer the question at all, but blaming voters for inaccurate estimates may not be justified. We guard against false responses to open questioning by providing dummy ballot sheets, listing the names and symbols of all candidates and parties in the fray in each constituency, and dummy ballot boxes in which respondents 'cast' their vote. This method has worked reasonably well in the polls we have conducted over the past few decades.

PEOPLE AND ORGANISATIONS are usually remembered for their successes, but when it comes to polls, they are somehow remembered more for their failures. Some critics are so obsessed with the notion that polls have 'always failed' that I was told even our Manipur poll had been inaccurate; I had to humbly point out that CSDS had not conducted any poll in Manipur. But the perception problem does not stop there. More than a decade on, people still remember CSDS's 'inaccurate forecast' of the 2004 General Election, even though the organisation made no forecast at the time; we did, however, conduct a post-election poll to analyse the results.

I am not saying that polls never fail. They do, just as there are failures in many other fields. But sadly, people still jump to the conclusion that all polls fail, time and again, without even bothering to look at the track record. This is like the belief among some sections of society that Muslims have come to outnumber Hindus, held without ever looking at the Census data.

Do we come across people saying India lost a cricket match without looking at the scores? We usually don't. In cricket, we tend to cite figures of success, not failure. Ask someone of an older generation how many centuries Sunil Gavaskar scored, and many would give you the exact number. Now ask someone a generation younger how many centuries, or double centuries, Sachin Tendulkar or Virat Kohli has scored; I doubt cricket fans would make any mistake. Ask the same people how many times Gavaskar was out for a duck or failed to score a decent number of runs, and hardly anybody would remember. The same applies to Tendulkar, Kohli or any other player. But psephologists are remembered more for their failures than for their successes.

I am sure CSDS's inaccurate assessment of the UP and Uttarakhand elections will stay in memory longer than our reasonably decent figures for Punjab and Goa. Nor, for UP, will anybody bother to look at our estimates for the 2007 and 2012 Assembly elections, or at our figures for Punjab's 2012 Assembly elections or those of Assam and West Bengal in 2011. (For the 2016 Assembly elections in Assam and West Bengal, we did not publicly release our estimates before the results.) Our estimates for Bihar's 2010 Assembly elections were spot on; again, we did not make our figures for the state's 2015 elections public.

In all, the list of accurate estimates is much longer than critics might imagine. This, however, is not the time to boast, for we must understand what went wrong this time and how it can be fixed. We also need to ask ourselves whether these polls exist merely to estimate how many seats each party will win and what share of the vote each will get. They serve a much bigger purpose: helping us understand the trends and patterns of elections, the issues, and the changes that may be taking place in Indian politics.

A knee-jerk dismissal of psephology would hardly help. Like other fields, it has inherent limitations; remember, it tries to capture human behaviour. Even a thermometer measuring the temperature of someone with fever may give different readings when used at short intervals. The same is true of devices that measure blood sugar or blood pressure. If machines fail to take precise measurements, imagine the difficulty of taking a close reading of electoral behaviour. But yes, if a thermometer gives erratic readings, we need to replace it. And if polls fail more often than expected, it would not be inappropriate to think seriously about the usefulness of their methods in a changing political scenario.