Ashwatthama Is Dead: On the Theatre of Lies in Wartime

At some point during the 15th day of the Kurukshetra war, when the earth was already red with moral compromise and cavalry blood, something unutterably modern happened. The Pandavas told a lie. Or not quite a lie. A half-truth, sharpened at the edges. Bhima killed an elephant named Ashwatthama and declared, "Ashwatthama is dead." Dronacharya (teacher, tactician, and possibly the most psychologically implausible character in the Mahabharata) refused to believe his son had been killed. So he asked Yudhishthira, who had never lied in his life. "Yes," said the son of Dharma, "Ashwatthama is dead." And then, under his breath, "…the elephant."

That whisper, at once factual, strategic and devastating, is the urtext of wartime misinformation. It was a statement engineered to bypass reason and go straight for the kill. Drona laid down his weapons. Moments later, Dhrishtadyumna cut him down. In the centuries since, the sentence has grown in sophistication, in reach, in velocity. It has learned new accents: the loudspeaker, the forged document, the typewritten press release, the burner account, the AI-generated satellite image. But the structure has barely changed. A kernel of truth. A silence. A body, or several.

Take the Gleiwitz incident, one of several staged attacks used by Nazi Germany to justify the invasion of Poland. August 31, 1939. A German radio station near the Polish border is "attacked" by Polish soldiers—who turn out to be SS men in costume. A corpse is left behind to make it look real, and the next morning Germany invades Poland. The fake attack becomes a real one. The war begins not with a bang but with a script. The only thing more chilling than the lie is how little time it needed to work.

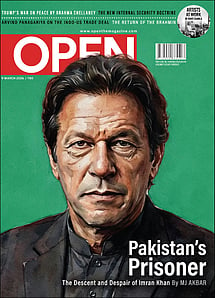

Imran Khan: Pakistan’s Prisoner

27 Feb 2026 - Vol 04 | Issue 60

The descent and despair of Imran Khan

Misinformation rarely needs longevity—only momentum. The goal is never to be believed forever. Only long enough to act.

The Americans understood this. Operation Cornflakes, devised by the US Office of Strategic Services in 1945, involved dropping sacks of fake mail, addressed to real German households and stuffed with anti-Nazi propaganda, beside bombed German mail trains so that the Reichspost would unwittingly deliver them. While inventive, it had limited reach and little documented impact on public morale or wartime outcomes. Historians regard it as a footnote in the Allied propaganda playbook: ingenious, but largely symbolic.

By the Cold War, misinformation had become elaborate and stylised. Take Operation NEPTUN in 1964. Czechoslovakia's secret service, with KGB guidance, planted fake Nazi documents in a lake. Divers "discovered" them. News outlets took the bait. West German politicians were tarred with fascist guilt. The operation succeeded domestically in bolstering anti-Western sentiment, even if it had limited international traction. It was then that what we now call disinformation began to perfect the art of shaping truth. And the enemy was no longer just the foreign army. It was uncertainty itself.

In Stalinist Russia, foreign correspondents were kept on the third floor of the Hotel Metropol in Moscow, fed censored press releases and shadowed by handlers. They typed their dispatches on typewriters provided by the state. Everything was surveilled. Nothing was spontaneous. These were not journalists. They were stenographers of what the Kremlin needed the West to see.

If truth under Stalin was curated absence, under Saddam Hussein it became a haunted surplus—too many horrors, too little verification. Before the 2003 Iraq war, tales emerged of Hussein feeding dissidents into industrial shredders. Feet first, to prolong the agony. The story was recounted in parliamentary speeches, media interviews, op-eds. But the sources were always vague—refugees, defectors, anonymous intelligence officials. No photographs. No names. No forensic evidence. Years later, journalists found no confirmation. And yet, at the time, the horror was viral. It added moral heat to a war already simmering. And in that way, it succeeded.

In October 1990, weeks after Iraq invaded Kuwait, a 15-year-old Kuwaiti girl testified before the US Congressional Human Rights Caucus that Iraqi soldiers had ripped premature babies out of incubators in a Kuwaiti hospital. The world wept. The footage spread. More than a year later, it emerged that the girl was the daughter of Kuwait's ambassador to the United States, and that her appearance had been arranged by Hill & Knowlton, a public relations firm working for a campaign funded by the Kuwaiti government. By then, the war had been fought and won. It turns out a single cry can drown out ten corrections.

The Yugoslav wars weaponised even the imagination. Serbian media outlets circulated a story that Croatian forces had massacred children in Vukovar. Though investigations found no evidence of such a massacre, it triggered retaliatory rhetoric and possibly attacks. That no children had been killed mattered less than the image of them dying. The rumour became a placeholder for rage. Misinformation doesn't have to be consistent with history. It only has to be consistent with pain.

In 2019, after the Pulwama suicide bombing that killed 40 Indian paramilitary personnel, India responded with an airstrike on Balakot in Pakistan. What followed was not just a military escalation, but a media war of biblical proportions. An Indian pilot—Wing Commander Abhinandan Varthaman—was captured after his plane was downed in Pakistani territory. A video of him calmly sipping tea while in custody surfaced. The gesture was undeniably humane. But the video's release was timed to the minute, saturating social media just as anti-Pakistan sentiment in India was peaking. It became a public relations coup. States had begun to use virality as policy.

And then the more surreal images began to surface: photographs of fallen soldiers mislabelled; AI-generated visuals of cities being bombed; news anchors reading made-up statements; deepfakes of enemy leaders blinking in loops of artificial contrition. The war no longer had a single front—it had feeds.

Which is what made May 2025 feel like déjà vu wearing newer shoes. Artillery fire erupted along the LoC. Indian drone footage showed strategic strikes on militant launchpads. Pakistan issued immediate denials and released its own footage. There were claims of civilian casualties, which were then retracted, then reasserted. On the fourth day, both sides agreed to an urgent ceasefire. But by then, the internet was ablaze. A second war unfolded online. A video claiming to show Indian soldiers abandoning their posts spread rapidly, shared by accounts eager to score points. It was fake: an old clip, doctored and repackaged. Around the same time, a graphic styled to look like it came from CNN claimed India had suffered more losses than Pakistan. CNN denied ever making it, and India's Press Information Bureau confirmed it was fabricated. Another rumour said Pakistan had destroyed an Indian S-400 missile system, a claim also debunked. The lies travelled faster than the corrections. By the time the truth caught up, the damage was already done.

Misinformation today is not just a strategy. It is the culture of war. A war without punctuation. A permanent hum of emotional mobilisation. What the Mahabharata knew—and what we keep forgetting—is that the most effective lie is the one that sounds like the truth. Ashwatthama is dead. Long live the elephant.