Face Value

Facial recognition technology has come of age, but is it experiencing a glitch in India?

Lhendup G Bhutia with Madhavankutty Pillai

24 Sep, 2021

(Illustration: Saurabh Singh)

FOR ABOUT TWO YEARS, A GROUP OF RESEARCHERS from Gwalior’s ABV-IIITM (Atal Bihari Vajpayee-Indian Institute of Information Technology and Management) would move around the campus trying to convince fellow students and staff members to pose in front of a camera. It wasn’t an easy task. The subjects were required to spend long hours in a studio under the glare of powerful lights and make faces at the camera, and sometimes return for the same tasks another day. “But you see, it was very important that we did it correctly,” says Karm Veer Arya, a professor of computer science at the institute and one of the researchers. “It could help solve a major pain point for facial recognition in India.”

For facial recognition algorithms to work well, they must be trained and tested on large datasets of images, and an algorithm built using American faces might not work very well here. According to Arya, there just aren’t enough datasets with Indian faces. By sometime in 2019, Arya and his team had painstakingly prepared a dataset of 1,928 images of 107 participants. Each participant was carefully chosen not only to represent the diverse geographic and racial groups of India but also to have a diverse look, from whether they wore glasses or had facial hair to the way they dressed. The participants had to express a different emotion in every image and were photographed from different angles. The researchers also created an additional dataset in which the backgrounds of the photos from the first set were replaced by more complex scenes, such as a railway station or a beach with many faces in the background, so that a facial recognition technology (FRT) system could be trained or tested on images that more closely resembled the world outside their studio. A total of 1,928 images may not be much, but Arya believes that creating such datasets of ordinary Indian faces, with more to come over time, will make facial recognition more accurate in India. “Otherwise it will never be foolproof,” he says.

One of the first and largest local Indian databases was built from photos of movie stars about eight years ago by researchers at the Centre for Visual Information Technology (CVIT) at IIIT Hyderabad. Vijay Kumar, then a PhD student there, led a team of 10 to create what became the Indian Movie Face Database. It had 34,512 face images of 100 actors from 103 movies. Kumar had initially wanted to study how facial recognition worked with Indian subjects but soon realised that there was almost no database of Indian faces. Most research at the time was happening at universities abroad, which used faces from their own countries’ movies, sitcoms and so on. The CVIT team ensured diversity in appearance, gender and other factors to give an all-India representation cutting across states. It took two to three months of painstaking manual work. The team labelled each of the more than 30,000 images, annotating them with six expressions, pose, age, features like beards and glasses, illumination, gender and more. The Indian Movie Face Database is now used across the world for facial recognition research involving Indian faces.

Arya says only through the creation of more local databases can FRT be made accurate in India. “Unless we build large and diverse datasets, the system is not going to be perfect,” he says.

Facial recognition is already a visible part of many of our lives. It is on our mobile phones, sparing us from remembering complicated patterns to unlock them. It is there on Facebook, prompting us to tag ourselves in photos of long-forgotten parties posted online by someone unknown to us. It is there on the mobile apps of Google, Apple and the rest, helping us compile albums of the people we take photos with. In India, as in many other parts of the world, the technology of reading a person’s face is spreading quickly, in ways both awe-inspiring and frightening. A rapidly expanding network of closed-circuit cameras in public areas like train stations and airports is being hooked up with FRT. Law enforcement agencies are photographing suspects to find out if they have a criminal record. We know that it was used during the riots in Delhi last year, and, according to Union Home Minister Amit Shah, over 1,000 arrests were made based on it. Missing children are being looked up and, in some cases, found. The Indian Army is using it for counter-terrorism. FRT is being installed at some airports to enable us to board planes without a physical boarding pass. There are proposals to use it to verify the identity of pensioners and to check pilferage in the distribution of foodgrain through the public distribution system. It is being used in some government schools in Delhi and to mark attendance in some offices of the National Thermal Power Corporation, and it was even used at some vaccination sites in Jharkhand as part of an Aadhaar-based facial recognition system. The Internet Freedom Foundation, an NGO that has been tracking the use of this technology, has so far found at least 75 programmes in which various government agencies have either incorporated the technology or are in the process of doing so. “That’s just what we have found. I would think there would be many more which are still secret,” says Anushka Jain, Associate Counsel (Surveillance and Transparency) at the Internet Freedom Foundation.

Appetite for the technology is growing rapidly among both government and private clients. According to TechSci Research, an Indian market analysis firm, India’s domestic facial recognition industry is projected to grow from $700 million in 2018 to $4.3 billion by 2024.
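As a rough check on what that projection implies, and assuming steady compounding over the six years from 2018 to 2024, the implied compound annual growth rate (CAGR) can be worked out from the two figures alone. This is back-of-the-envelope arithmetic on the numbers quoted, not a figure published by TechSci:

$$
\text{CAGR} = \left(\frac{4.3}{0.7}\right)^{1/6} - 1 \approx 6.14^{1/6} - 1 \approx 0.353
$$

In other words, the forecast amounts to the market growing by roughly 35 per cent a year.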

Vijay Gnanadesikan, whose FaceTagr is among the most popular facial recognition systems currently in use in India, initially thought he could use this emerging tech in the retail space. “The reason brick-and-mortar retailers are lagging behind e-commerce websites is because they don’t have the kind of data e-commerce has. Data like who is coming, what are they spending time on, what products interest them. Back in 2016, we thought with computer vision and facial recognition technology, we could give that kind of e-commerce analytics to brick-and-mortar retailers,” Gnanadesikan says.

Towards the end of that year, moved by the sight of a destitute girl begging on the street, Gnanadesikan began collating public databases of missing children and using the technology to reunite them with their families. Government agencies soon began approaching him. “We were not into police at that time. But everyone (from the police) said that this is such a great technology. That we have to work with the police,” he says. FaceTagr is currently used by the police in two states and a Union territory, and a third state is trying it out as a pilot project. Others, such as the South Western Railway, use it at railway stations to check child trafficking and to reunite missing children with their families, and the Army uses it for counter-terrorism.

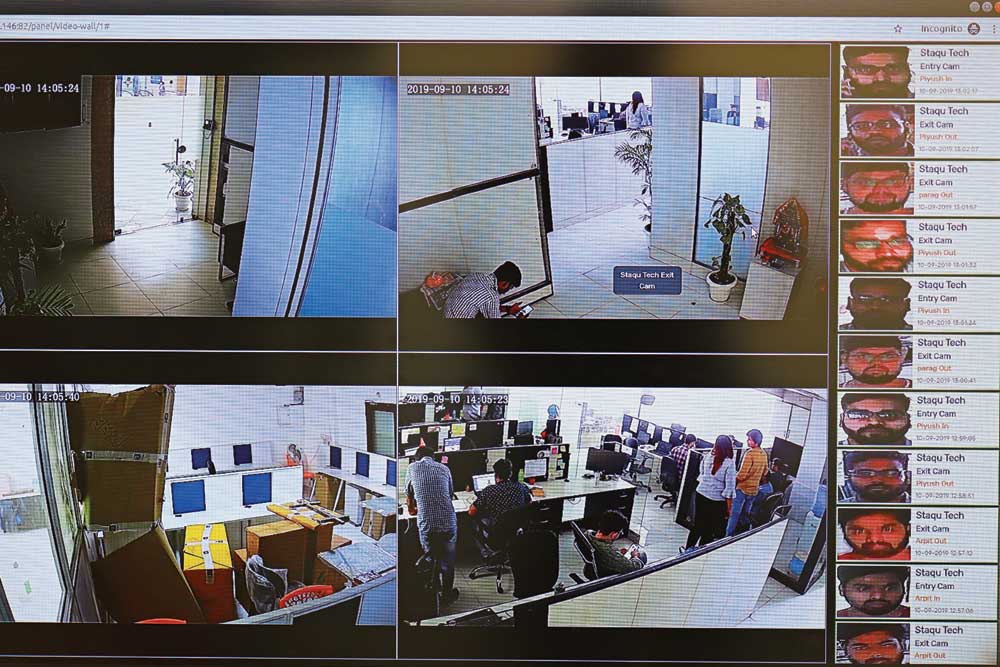

Law enforcement agencies use Gnanadesikan’s system in two ways. The first is by applying the technology across a network of CCTV cameras. The other, and more popular way, is in the form of a mobile app linked to the police’s database on criminals. “Say, someone suspicious is walking on the streets at 2AM. What they had to do earlier was to lead them to a police station, take fingerprints and wait for 24 to 48 hours for the results to come to know who this person is. Now, all the policeman needs to do is to take a photo on the app. And in less than a second they get the result. If there is a match (with the database on criminals) they can investigate,” he says.

The app also provides a confidence score, the system’s way of telling the policeman how sure it is that the person photographed is someone in the criminal database. To ensure that the policeman does not rely blindly on the app, the company has deliberately kept this score low. “Even if it is really 99 per cent sure, we have kept it slightly on the lower side, so that the policeman using it does a thorough check on his own. Usually 80 per cent or higher means they are probably the same person. This also helps pull up siblings as well,” Gnanadesikan says. “And it comes most handy with (missing) children. Because children grow fast, their faces also change. We don’t claim this is the same kid (with a match on their database with a relatively low score), but if a child photographed is pulling a match with two photos from (a database of) six lakh photos, then it is for a reason and it needs to be checked out.”
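The confidence score described here can be illustrated with a common, generic approach: convert the photographed face into a numeric “embedding”, compare it against every record in a database, and return only the records above a threshold, ranked for a human to check. The Python sketch below is a hypothetical illustration, not FaceTagr’s actual method, which is not public. The function names, the choice of cosine similarity and the toy vectors are all assumptions, and the 0.80 threshold merely mirrors the “80 per cent” figure Gnanadesikan mentions rather than any real calibration.

```python
import math

# Hypothetical sketch, not FaceTagr's method: assumes every face has already
# been turned into a fixed-length embedding vector by some recognition model.


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length, non-zero vectors (range -1 to 1)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_candidates(probe, gallery, threshold=0.80):
    """Return (record_id, score) pairs scoring at or above `threshold`, best first.

    The result is a shortlist of leads for a human to check, not an identification.
    """
    scored = [(rid, cosine_similarity(probe, emb)) for rid, emb in gallery.items()]
    hits = [(rid, score) for rid, score in scored if score >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


# Toy three-dimensional "embeddings"; real systems use many more dimensions.
gallery = {
    "record_A": [0.9, 0.1, 0.0],
    "record_B": [0.0, 1.0, 0.0],
    "record_C": [0.8, 0.3, 0.1],
}
probe = [1.0, 0.2, 0.0]
print([rid for rid, _ in find_candidates(probe, gallery)])  # ['record_A', 'record_C']
```

Returning a ranked shortlist rather than a single best match is what lets a system surface, say, two candidate photos out of six lakh for an officer to investigate.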

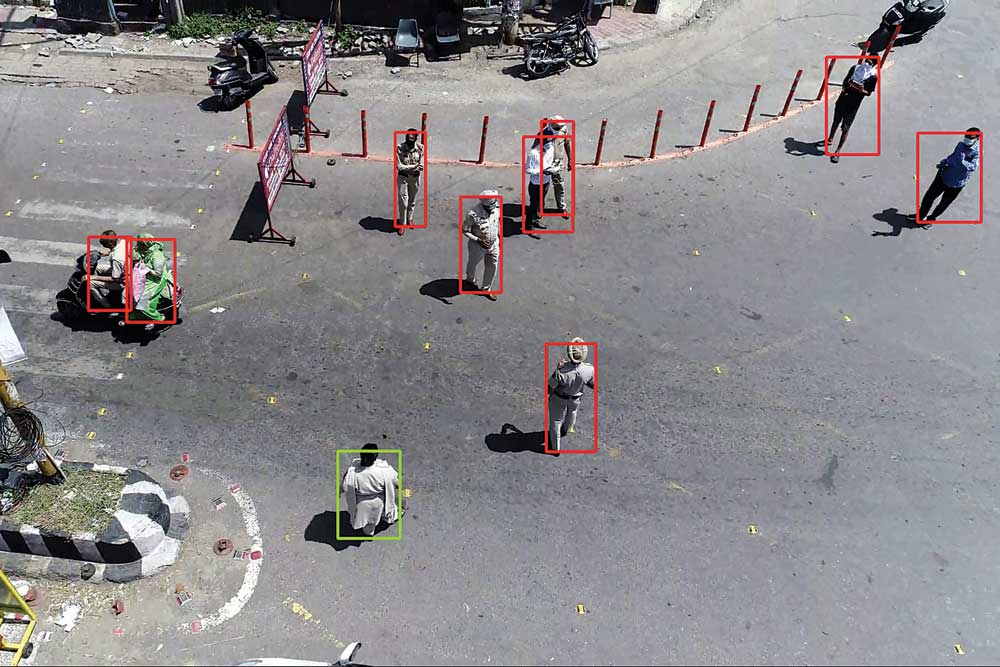

San Francisco-based startup Skylark Labs, which offers several FRT services in India, has been working on a number of hurdles that such technology faces. While earlier research has shown that it is possible to detect disguised faces, the firm can also get its algorithms to recognise faces in crowded spaces like those in India’s urban centres. It has also developed a drone-based artificial intelligence (AI) system that uses cameras mounted on drones to analyse the postures of people fighting on the roads, or to spot suspicious items such as unattended bags or weapons, and then sends alerts to the police. A modified version of this system was used by the police in several cities in Punjab last year to get alerts about individuals breaking lockdown regulations.

FRT is also increasingly being used in the private sector. According to its co-founder Tarun Wig, Innefu Labs’ system is currently being used by banks for KYC (Know Your Customer) checks and loan fraud detection, and also to register employee attendance. Gnanadesikan’s FaceTagr is often used at exhibitions, where attendees’ faces serve as their tickets, for attendance and visitor management in office buildings, and in retail outlets to learn, for instance (without identifying an individual), how many of those who visit a store return in another week. “Right now, FRT is very big among law enforcement agencies. But over time its use is going to grow a lot in the private space too,” Gnanadesikan says.

BUT HOW ACCURATE is the technology in India? In recent years, the accuracy of FRT systems has been found to vary widely across gender and racial groups. One project in the US, titled Gender Shades, showed that commercial facial-analysis systems were far more likely to misclassify dark-skinned women than light-skinned men. In another test, by the American Civil Liberties Union (ACLU), Amazon’s Rekognition erroneously matched 28 members of the US Congress with individuals in a database of 25,000 publicly available mugshots; these false matches included a disproportionate number of members of the Congressional Black Caucus.

One might assume that facial recognition algorithms are neutral, but according to their detractors, they reflect the conscious and unconscious biases of the people who create and train them. Earlier this year, in a paper titled ‘1.4 billion missing pieces? Auditing the accuracy of facial processing tools on Indian faces’, public policy researchers Smriti Parsheera and Gaurav Jain set out to test this by measuring how four popular facial recognition systems performed on Indian faces: Microsoft Azure’s Face, Amazon’s Rekognition, Face++ and a tool from the Indian company FaceX. To better reflect the diversity of faces in the country, they tested the four tools on a public database of election candidates drawn from the Election Commission of India’s website over a certain period, 32,184 faces in total.

The results were far from satisfactory. Error rates varied across the face detection, gender classification and age classification functions. The gender classification error rate for Indian female faces was consistently higher than for male faces, with the highest female error rate reaching 14.68 per cent. Even allowing an acceptable error margin of plus or minus 10 years from a person’s actual age, age prediction failure rates ranged from 14.3 per cent to 42.2 per cent.
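The two kinds of error reported in the study can be made concrete with a short calculation. The Python sketch below computes a gender classification error rate separately for each group, and an age “failure” rate that counts a prediction as correct if it falls within plus or minus 10 years of the true age. The function names and sample records are hypothetical, invented for illustration; this is not the authors’ evaluation code and does not reproduce their data.

```python
# Hypothetical sketch: the sample records are invented and are not data from
# the Parsheera and Jain study.


def error_rate(pairs):
    """Fraction of (true_label, predicted_label) pairs that disagree."""
    if not pairs:
        raise ValueError("no records to evaluate")
    wrong = sum(1 for truth, pred in pairs if truth != pred)
    return wrong / len(pairs)


def gender_error_by_group(records):
    """Error rate computed separately for each true gender label."""
    groups = {}
    for truth, pred in records:
        groups.setdefault(truth, []).append((truth, pred))
    return {label: error_rate(pairs) for label, pairs in groups.items()}


def age_failure_rate(records, tolerance=10):
    """Fraction of (true_age, predicted_age) pairs off by more than `tolerance` years."""
    if not records:
        raise ValueError("no records to evaluate")
    failures = sum(1 for true_age, pred_age in records if abs(pred_age - true_age) > tolerance)
    return failures / len(records)


gender = [("F", "F"), ("F", "M"), ("F", "F"), ("F", "F"),
          ("M", "M"), ("M", "M"), ("M", "M"), ("M", "M")]
ages = [(45, 50), (60, 48), (35, 46), (52, 52)]
print(gender_error_by_group(gender))  # {'F': 0.25, 'M': 0.0}
print(age_failure_rate(ages))         # 0.5
```

Reporting the error rate per group, rather than as one overall figure, is what exposes a gap between female and male faces: pooled together, the eight toy gender records above would show a single 12.5 per cent error rate.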

“For most privacy researchers, facial recognition technology raises immediate red flags due to its ability to be deployed without the knowledge or consent of the individual and the scale at which this can interfere with civil liberties. My first point of interest was also from a privacy and civil liberties perspective. The widespread adoption of facial recognition technologies in India coupled with unanswered questions about how well the technologies work and for whom is what prompted me and my co-author Gaurav Jain to take up the research,” Parsheera says.

AFTER THE PUBLICATION of studies such as Gender Shades that exposed the biases within these systems, follow-up studies found that the tools had improved. But Parsheera and Jain’s paper shows that these improvements did not carry over to an Indian setting. “We found that one of the tools, Face++, presented a gender classification error of almost 15 per cent for Indian females as compared to the 2.5 per cent error rate generated by the same tool in an earlier study involving images of persons from other nationalities,” she says.

According to her, there is a general lack of transparency on what goes into the design and training of facial processing models which makes it difficult to pinpoint the specific reasons for the observed inaccuracies. “One could, however, venture to suggest that the lack of adequate representation in the training datasets could be a key reason why such tools may perform better for certain demographic groups, such as young white males, compared to others. Therefore, if people from a certain demographic group, which could be demarcated on the basis of gender, age, region, caste, tribe and several other identifiers, are not represented in the data used for training a particular system, the chances of errors for those groups become higher,” she explains.

The paper also points out the lack of India-specific facial datasets; most of the existing ones include only a limited number of unique individuals. “Covered subjects are often university students, volunteers from major cities or movie celebrities. Some Indian faces also find a place in popular fair computer vision datasets but are generally grouped under the broader category of South Asian faces,” Parsheera and Jain write.

Divij Joshi, a lawyer and researcher, says that while there is little transparency on the accuracy of FRT in India, the little that has come out into the open suggests it isn’t very good. He points to reports of documents submitted to the Delhi High Court by law enforcement authorities using FRT to identify missing children, which stated that the system operated at an accuracy rate of 2 per cent before dropping to 1 per cent in 2019. That same year, the Ministry of Women and Child Development revealed that the system failed to distinguish between boys and girls. According to Joshi, while several companies, including Indian ones, claim their accuracy rates have been tested against international benchmarks such as those of NIST (the US National Institute of Standards and Technology), a high score there does not necessarily mean they can achieve similarly high accuracy on Indian streets. “Benchmarks such as NIST don’t necessarily work because they are known to have discrepant performances across different demographics,” he says.

Because of concerns about privacy and fairness, many companies around the world are growing wary of how the technology is used. Microsoft, Amazon and others, for instance, are pulling back from supplying their technology to police and other law enforcement agencies. Vijay Kumar, who led the creation of the Indian Movie Face Database, says, “All of this happened due to racial and diversity biases in the datasets. Algorithms tend to work well only on a subset of demography, age group, race, etcetera. This is one of the major open problems that need to be addressed before the technology can be widely used in critical-use cases. One direction to address both fairness and privacy is to systematically generate synthetic (fake) diverse facial images (people) which can then be used to train face recognition models.”

According to those in the FRT business, real-world accuracy is something no one can measure. “In a controlled environment using the NIST framework, I can say we have an above 95 per cent accuracy. But if you put the cameras on Chandni Chowk and tell me to identify 100 people, where only the hair is visible in some cases, what can I do?” says Wig. Gnanadesikan has heard about the varying error rates across different demographic groups. At least two of his clients, one of them the police force of a foreign country currently trialling FaceTagr, have reported that their previous FRT systems often failed to read dark-skinned individuals.

Concerns about FRT’s inaccuracy, coupled with its rapid adoption across the country, have led many to fear that innocent individuals could be incarcerated or excluded from public welfare measures. There is also apprehension that the state could misuse the technology to erode civil liberties and target its critics. What has alarmed activists most is the National Crime Records Bureau’s proposal for a National Automated Facial Recognition System, which would build a national database out of existing ones.

“Right now, there is no transparency on how it is being used or for what. You can’t even say it is being misused because there is no law that is there as a safeguard,” says Jain of the Internet Freedom Foundation, which has started a programme called Project Panoptic, through which it files RTI (Right to Information) requests to uncover information about these projects and raises awareness through an online dashboard that tracks them. Joshi points out that during recent protests, such as those against the Citizenship (Amendment) Act and those in support of farmers, policemen often used their mobile phones to photograph even peaceful demonstrators so that the photos could be analysed using facial recognition technology.

Wig does not like to get drawn into the issue of misuse. “Look at a city like Delhi. There are so few policemen for a population of about two crore. What FRT can do is take the 95 per cent of that population who are law-abiding citizens out of the equation,” he says. “It is a tool, right? You can use it for good or bad.”
