Damned by Deepfakes

IT TAKES A CELEBRITY to make a malicious technology that has been in existence for years become real to Indian society. An X (formerly Twitter) user posted two videos which were exactly the same, of a woman entering a lift. Except that one of them had the face of the actress Rashmika Mandanna, who is extremely popular in south India but also became known in the rest of the country after the movie Pushpa: The Rise became a blockbuster. The real video was of a British influencer called Zara Patel. The one with Mandanna was a fake created using artificial intelligence (AI) tools, a phenomenon that goes by the label deepfake. The person who posted it did so to create awareness about how prevalent deepfakes were, and the objective was met. The post went viral and drew comments from other celebrities, which further fuelled its publicity. Amitabh Bachchan, who had acted alongside Mandanna in a movie, posted: "Yes, this is a strong case for legal." Mandanna herself put up a post on X voicing her anxiety, stating that it was "extremely scary" how the misuse of technology is making people vulnerable and that while she herself had a support system of friends and family to deal with such an issue, if such a thing had happened to her when she was in college, she would have been devastated. "We need to address this as a community and with urgency before more of us are affected by such identity theft," she wrote.

The government responded as the issue snowballed. Rajeev Chandrasekhar, Union Minister of State for Electronics and Information Technology, put up a post on X that said: "Under the IT rules notified in April, 2023 – it is a legal obligation for platforms to ensure no misinformation is posted by any user, and ensure that when reported by any user or govt, misinformation is removed in 36 hrs. If platforms do not comply with this, rule 7 will apply and platforms can be taken to court by aggrieved person under provisions of IPC. Deep fakes are latest and even more dangerous and damaging form of misinformation and needs to be dealt with by platforms." As per media reports, this was followed by the government telling social media platforms like Instagram that deepfakes needed to be taken down within 24 hours when they received a complaint.

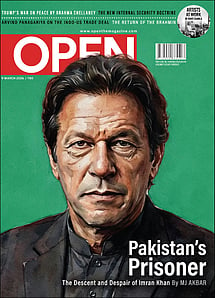

And yet, despite all the alarm and anxiety, and even though the responsibility for policing deepfakes has been thrust onto the platforms, it is not a problem that is going to be solved anytime soon. Deepfake is even worse than fake news; it is fake reality, and as the technology improves, it is going to get even better at what it does. Right now, the programme needs a large number of images and videos of the victim to make a good impersonation. This is why people in public life, like movie stars and politicians, are the easiest targets: there are so many images of them from every possible angle that the programme can morph their faces onto another body.

But, eventually, as AI gets better, this won't be a limiting factor, and then anyone and everyone will be a potential victim. Mandanna's fear of a college student being a victim could soon come to pass. Every jilted lover, every man or woman who has a quarrel with a neighbour or who dislikes someone in the office, will be able to create deepfakes ranging from pornography to confessions of crimes.

After the Mandanna incident, the government restated that the creation of deepfakes could lead to three years in jail. But law by itself is rarely enough to stop something that is just a download away. One of deepfake's biggest uses is in pornography, and that is even where it got its name. It came into public consciousness in 2017 when a Reddit user who went by the name "deepfakes" started posting pornographic images of celebrities made using the technology.

What really triggered interest in it came the following year, when Buzzfeed released a fake video of former US President Barack Obama giving a talk in which he warned against such impersonations. It was made using technology that was freely available online. A Buzzfeed article said it took 56 hours and a professional to make it, but warned that big dangers lay ahead: "So the good news is it still requires a decent amount of skill, processing power, and time to create a really good 'deepfake'. The bad news is that [with] the lesson of computers and technology, this stuff will get easier, cheaper, and more ubiquitous faster than you would expect—or be ready for."

It has taken five years since then for deepfakes to enter the mainstream consciousness of India, and much of it has to do with what Buzzfeed had predicted: the technology has progressed. There is now even a word for fakes made with comparative ease: cheapfakes. A New York Times article in March spoke about it: "Making realistic fake videos, often called deepfakes, once required elaborate software to put one person's face onto another's. But now, many of the tools to create them are available to everyday consumers — even on smartphone apps, and often for little to no money. The new altered videos—mostly, so far, the work of meme-makers and marketers—have gone viral on social media sites like TikTok and Twitter. The content they produce, sometimes called cheapfakes by researchers, work by cloning celebrity voices, altering mouth movements to match alternative audio, and writing persuasive dialogue."

The Mandanna video would probably be an example of such a cheapfake given that close observation of it could still give hints of it being a fake. As the user who put out the video himself had written: "From a deepfake POV, the viral video is perfect enough for ordinary social media users to fall for it. But if you watch the video carefully, you can see at (0:01) that when Rashmika (deepfake) was entering the lift, suddenly her face changes from the other girl to Rashmika."

The universe of deepfakes keeps expanding. There are now not just impersonations of looks but also audio fakes, where, like a mimicry artist, the programme puts words into a person's mouth. This is liable to be misused against politicians and leaders, whose public statements can have damaging effects. The onus of countering deepfakes has fallen on big tech companies like Google and Meta, which owns Facebook and Instagram, and they are trying. They have begun to insist on transparency from those who create non-malicious content with such tools.

The problem will become more acute in the immediate future because there are many important elections in countries like the US and India. Meta has just announced a policy that any use of deepfake in political advertisements must be openly stated. AI is also being used to weed out deepfakes. But the genie is increasingly getting out of the bottle and it doesn't look like it will go back easily.