Digital Sovereignty

IN THE NOT-TOO-DISTANT FUTURE, AN ARTIFICIAL superintelligence (ASI) will emerge that will punish those of us humans who did not help to bring it into existence, who knew it could be created but did not contribute to that end. That summarises an elaborate argument based on propositional logic that turns probability into rational or reasonable expectation, dispensing with statistics or frequency of occurrence, to bank on hypotheses, which can never be proved one way or the other. This particular belief about the ASI is called Roko's Basilisk, referring to the basilisk that could turn people into stone with its gaze.

In Berkeley, California, there is a group calling itself the Rationalists that was talking about artificial intelligence (AI) long before it became a household term, long before the advent of ChatGPT. This group reportedly works behind the scenes although not quite clandestinely, and much of Silicon Valley is beholden to what it says. Some Big Tech super bosses are reportedly adherents of Rationalist thought, which has turned into a sort of secular or pseudo religion. They are supposed to be good people because their faith in AI ultimately working for the greater good is premised on the condition that AI does not annihilate us first. Rationalists or Rationalist-linked board members of OpenAI were allegedly responsible for Sam Altman's brief sacking as CEO because they smelt evil in his vision of AI, the kind that would not help humanity in the end.

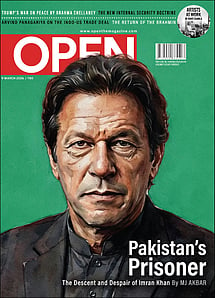

Evil in the form of AI is blowing across the world on an "easterly wind". One example will suffice.

Recently, Vanderbilt University's Institute of National Security came into possession of a large cache of documents showing how a Chinese company called GoLaxy has emerged as "a leader in technologically advanced, state-aligned influence campaigns, which deploy humanlike bot networks and psychological profiling to target individuals."

Writing in the New York Times on August 5, Professors Brett J Goldstein and Brett V Benson of Vanderbilt who specialise in international security, said, "With the exponential rise of generative A.I. systems, the greatest danger is no longer a flood of invective and falsehoods on social media. Rather, it is the slow, subtle and corrosive manipulation of online communication—propaganda designed not to shock, but to slip silently into our everyday digital discussions. We have entered a new era in international influence operations, where A.I.-generated narratives shift the political landscape without drawing attention."

The line between surveillance and propaganda has been blurred, and Russia's social media operations targeting the US elections or Western Europe, or indeed the infamous St Petersburg "troll factory", are now passé. The Chinese are changing the game.

GoLaxy, closely tied to and funded by the state, including by the US-blacklisted company Sugon, which is run by the Chinese military, uses a technology called GoPro which is basically a Smart Propaganda System (SPS). It was deployed in the Taiwan election campaign and is being used to sanitise Hong Kong and its pro-democracy instincts. But GoLaxy is also, according to the documents jointly analysed by Vanderbilt experts and NYT investigators, expanding its operations to the US, collecting data on Congressmen and Americans of consequence. Officially, GoLaxy denies it all. But in its pitch to the Chinese government, it boasts a lot.

Messrs Goldstein and Benson write: "What sets GoLaxy apart is its integration of generative A.I. with enormous troves of personal data. Its systems continually mine social media platforms to build dynamic psychological profiles. Its content is customized to a person's values, beliefs, emotional tendencies and vulnerabilities." The danger is that "A.I. personas can then engage users in what appears to be a conversation—content that feels authentic, adapts in real-time and avoids detection. The result is a highly efficient propaganda engine that's designed to be nearly indistinguishable from legitimate online interaction, delivered instantaneously at a scale never before achieved."

The company and the Party believe that they will prevail over the democratic West—in telling "China's story", in "amplify[ing] China's voice and expand[ing] China's influence, as well as providing comprehensive technological support to quickly make 'the easterly wind overpowering the westerly wind' a reality." The only problem is that there is a lot of conceit here but not a lot that shows it is going to work. Not yet. But that does not mean the danger of synthetic content and silent manipulation is not real.

As language and data are served up at the altar of AI to do with them as it pleases, most human activity on the planet will soon be the happy hunting grounds of the algorithm. From IoT to school essays, office emails, research and policymaking, execution of government programmes, manufacturing, driving, arms and wars, all the way to geopolitics. Wars are already fought on screens manned by geeks, and weapons and weapon platforms are being AI-enabled. The first and last war to win is that of manipulation. Surveillance and deceit. And the place to begin is the data, humanly incalculable amounts of which are fed to AI systems. Democratic and plural societies are naturally at a disadvantage here.

So, what does national sovereignty after the advent of AI look like? It is, first of all, about managing the data. Sovereign AI means not only states helping build their own algorithms and AI infrastructure but also building these to comply with the state's own data sovereignty. This is a fundamental regulatory requirement to ensure all sensitive data processed and stored by AI systems remain within a state's legal jurisdiction. Sovereign AI is subsumed under the broader concept of digital sovereignty which encompasses national security, economic growth and politics. Sensitive data can range from defence to finance or healthcare—information that must not fall into the hands of a hostile power, state or non-state.

Sovereign AI is not just about control over the data and the systems but also the ability to create AI technology and functions that extend the national interest. The release of India's open-source national AI stack aimed at reducing our dependence on Silicon Valley and all foreign platforms is a welcome development. While this is about resilience and self-reliance, it is also about catering to India's specific needs and aligning present and future development of AI systems to the stated objectives of the national strategy for AI, which in sum is: AI for All. This strategy aims at "enhancing and empowering human capabilities to address the challenges of access, affordability, shortage and inconsistency of skilled expertise; effective implementation of AI initiatives to evolve scalable solutions for emerging economies" (Amitabh Kant, former CEO, NITI Aayog).

The national AI stack's emphasis is on adaptability and flexibility, such as the ability to operate under low bandwidth. Language models, developer toolkits, APIs, training modules, etc are all included in a free-flowing but engineered experiment where one player can build on the creation of another. It will take AI to communities with hitherto limited digital access and experience.

In theory it is the very definition of Sovereign AI; in practice it can revolutionise how the world looks at AI. But the price will be constant vigilance.

Regulatory compliance and data protection are only the first and second of the many advantages of Sovereign AI although these are the primary ones. India's ambitions are a classic case of the need for and uses of market-specific AI solutions and, by extension, these can create more jobs by building an industry with technological innovations and ultimately speed up economic growth.

As a matter of fact, while there is so much concern worldwide about AI taking away people's jobs, little is said about the number and kinds of jobs AI will create. One reason for that, of course, is a willing blindness to the fact that behind the machine there will still be a woman or a man, for a long time yet.

Nevertheless, the next and last rung of the ladder is national security. If a state can secure its data and the systems that run on that data, it can also protect all critical infrastructure, from defence to public utilities like water and electricity (or traffic lights). All such critical infrastructure can and will be targeted in a conflict and the enemy would want to take them out to cause maximum damage—a socio-economic and civic collapse, a loss of national mobility—at minimum expense.

None of this means Sovereign AI, or exerting national sovereignty over AI, is tantamount to digital isolation. Rather, it is about resilience. And a democracy has a far tougher job guarding itself against hostile action while ensuring civil liberties. It is that vulnerability of a country like India or the US that an authoritarian adversary like China seeks to exploit—the multiplicity of views, the diversity of people, the essential openness of an open society.

Therefore, international cooperation is necessary to build global AI standards and regulations that will manage cross-border flows of data and technology and help like-minded states work together on cybersecurity and data privacy. Sovereign AI does not mean locking up a country's AI infrastructure.

There are supposed to be three fundamental models of AI strategy. The European Union's (EU) approach is regulatory, whereby the EU has been the first and biggest global mover in framing rules and regulations for data privacy, securing digital sovereignty and enforcing ethical standards on AI development. Companies that run AI operations in the EU face the most stringent data protection requirements, with the General Data Protection Regulation (GDPR) granting individuals much more control over their personal data than anywhere else while ensuring regulatory compliance on data processed within the EU.

The second is the Chinese state-driven model where not only are private tech companies and academies locked into the state ecosystem but everything is integrated in an attempt to, on the one hand, make China the most powerful AI player in the world by 2030 and, on the other, exert full control over the population. Surveillance is the obverse of China's cutting-edge AI prowess, and Beijing has invested billions in both. Unsurprisingly, in such a system defined by its Great Firewall, the state has total control over the data generated in its jurisdiction.

The third way is the American. In the native land of free-market innovation, the objective is to maintain technological superiority and global leadership and thereby ensure national security. The state guides and invests in what is essentially a private-sector effort, helmed by Big Tech, to constantly innovate and advance AI systems. The state has another role: it is responsible for national cybersecurity and protection of critical infrastructure. Just as the Defense Advanced Research Projects Agency (DARPA) is a big investor in AI R&D, an entity like the Cybersecurity and Infrastructure Security Agency (CISA) is tasked with keeping America safe from AI-enabled threats, which is not the same as fears about AI itself. Unlike the Chinese top-down state-run model, this is a genuine private-public partnership optimising the strengths of each sector.

India has been a late starter but better late than never. It is nowhere near the US or China in terms of cutting-edge AI development but its challenges are also unique. It has put itself on the global AI map, has a vision and, one might say, an AI philosophy that means well. The question is not whether it will work out but how soon. One might also stick one's neck out and proclaim that the Indian approach could well be a fourth way where data protection, innovation, public good and national security are harmonised seamlessly. There is not much that may be very original here but for the important factor of critical balance. And that is enough.

THERE IS A lot that is happening in India. The state is actively helping build an AI ecosystem that is also affordable and accessible. Initiatives like the IndiaAI Mission and the Centres of Excellence for AI are not only democratising AI but also breaking the hold of global tech giants. The availability of GPUs (graphics processing units), research facilities and computing power is improving. The government has allocated more than ₹10,300 crore over five years for AI R&D and infrastructure. A key focus of this mission, according to the Ministry of Electronics and Information Technology (MeitY), is "the development of a high-end common computing facility equipped with 18,693 Graphics Processing Units (GPUs), making it one of the most extensive AI compute infrastructures globally. This capacity is nearly nine times that of the open-source AI model DeepSeek and about two-thirds of what ChatGPT operates on."

India ranks No 1 in global AI skill penetration with a score of 2.8, beating the US (2.2), according to the Stanford AI Index 2024. India's AI talent concentration is also up by 263 per cent over the last decade, as per the data. Given India's multiplicity of everything, the scale always needed to be different and foundational AI models, such as our Large Language Models (LLMs) and Small Language Models (SLMs), had to be developed accordingly. High-quality anonymised, non-personal datasets have thus been made available to AI startups, as has the world's first open GPU market. The foundation and structure of India's AI mission are thus democratic, ensuring as level a playing field as possible.

Where the state will again have a big role to play concerns the original price of this harmonising act: eternal vigilance. Openness, access and affordability, naturally, do not make security any better. Everybody will have to keep their eyes wide open, all the time. Because the line between algorithm and human, between machine and mind, has already been blurred. Because national sovereignty will be secured or lost, or lost and won again, at the altar of AI. The future is the present. The odds are against democratic societies. They have to work twice as hard to stay safe.

GoLaxy is only the advance guard of the perfect storm that is coming. Perhaps India will be an AI powerhouse by August 15, 2047. But the world must still be a place where August 15 means what it does.