The Class of Tomorrow

PUNE-BASED ENGLISH professor Kunal Ray says he does not grade his students based on exams alone, but on how they "reflect" on what they have learnt. He promotes what he calls intensely participatory classes and therefore has no apprehensions about anyone else, least of all a robot, replacing him as a teacher, especially in the humanities. Not to date, at any rate, and not by Artificial Intelligence (AI) language models that merely pick up data that is already available, argues Ray, who teaches courses in literary and cultural studies at FLAME University. This is why he thinks that ChatGPT, the AI tool from the US-based company OpenAI that helps in searches as well as in writing poetry, essays, and exams, won't make his role irrelevant or less crucial than it was before the tool was launched in November last year. Ashoka University academic, translator, and columnist Rita Kothari agrees and sees ChatGPT or its rival from Google, Bard, which is yet to be made available for public use, as helpful to students in doing things that are routine and in completing assignments that do not require much creative thinking. Kothari, a multilingual scholar who has previously taught at St Xavier's College (Ahmedabad), MICA, and the Indian Institute of Technology, Gandhinagar, tells Open, "What is seen as competence [understanding of certain subjects that are already in the public domain and that which only needs elaboration] can be replaced or made redundant. But thinking, reflection, and experience are still the preserve of the human."

This means these two academics see no problem in ceding the part of their curricula that students can pick up from AI tools because they think they will be left with their core function: teaching based on critical thinking and experience. ChatGPT and its kind will liberate them from having to reinvent the wheel, that is, from teaching things that are already there for anyone to learn, goes their line of thought.

This is certainly the argument among academics who exude confidence that these AI tools will only complement their role, not surpass or replace them. But some are worried, not about themselves, but about the future of learning across segments. We will come to them later. As of now, many academic institutions as well as governments are keen to make use of AI technologies for what they see as huge gains in the democratisation of information and knowledge. The government of India, according to reports, is determined to tap ChatGPT to the fullest to do innovative things, including dissemination of information in regional languages to groups such as farmers who, if the government's plans succeed, can avail of the services of chatbots to know more about government schemes. According to a news report, a group christened Bhashini, attached to the Ministry of Electronics and IT (MeitY), is developing a ChatGPT-powered WhatsApp chatbot to put information about government programmes useful to farmers at their fingertips (meaning on WhatsApp). The Indian ministry is not alone in making the most of this opportunity. According to a report in Business Insider, Institut auf dem Rosenberg, one of the world's most expensive schools, feels that it is hypocritical to ask its students not to use AI products. The report quotes Anita Gademann, the school's director and head of innovation, as saying that she had been preparing for AI five years earlier. It adds, "Gademann says students are taught to use AI 'as a tool', and she's particularly excited by text-to-image generators like DALL·E. Seventh-graders used it for a history project to visualise the Middle Ages and the 'discrepancies between nobility and peasants'. Another student used DALL·E to generate pictures in an essay about the role of women in the First World War." DALL·E creates images based on text commands and has left the creative world in a tizzy.
From fantastical works of art to instructive videos to even magazine covers, the image-generating tool is all set to revolutionise the way humans create and consume art and design and has been hailed and criticised alike for its powers, which evoke both celebrations of beauty and warnings about fake imagery. There are more such reports about AI enthusiasts who are kicked about how new technologies can aid education. Like them, many policymakers, too, feel that there is little reason to be anxious about new technologies because all through history new inventions have met with panic and high doses of initial scepticism, be it calculators, computers, or the internet, whether used by students or the rest of the public. They are of the view that alongside whatever disruptions AI makes, there are gains as well, especially in making life less cumbersome. You no longer have to count tediously because machines do it for you. Nor do you have to remember spellings because they are autocorrected as you write or speak thanks to software, and you don't have to remember phone numbers and addresses or write them down in notebooks, they contend. The key point they make is that technologies leave you more time to focus on the creative aspects of learning.

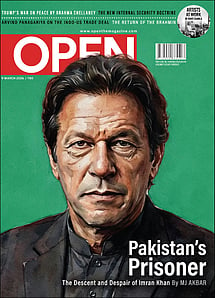

Imran Khan: Pakistan’s Prisoner

27 Feb 2026 - Vol 04 | Issue 60

The descent and despair of Imran Khan

They repeatedly assert that the march of technology cannot be stopped. Certain organisations insist, however, that governments will have to step in to help people who stand to lose their livelihoods to AI. Bharatiya Mazdoor Sangh (BMS), the trade union arm of the Rashtriya Swayamsevak Sangh (RSS), the parent body of the ruling BJP, has called for putting in place regulations to check AI's misuse, which typically includes impersonation (some products can imitate voices) and a raft of other problems. Deepfakes are what is often talked about when people dwell on AI and its adverse impact. BMS recently issued a statement cautioning the Indian government regarding the usage of AI. "The government should also envision and establish some regulatory mechanism for Artificial Intelligence. It must specify areas where it can be used and where it should not be used," it said. There is, of course, no shortage of people who seek government protection for those whose jobs may be in jeopardy. That such workers form a large section of the population is more or less a valid argument.

COMING BACK TO ChatGPT, there are those within and outside of academia who feel that all is not well with such AI tools. They argue that there is more to such offerings than meets the eye, as some studies categorically suggest. Some of them are not only reviewing these tools but also exploring ways to overcome the hurdles they throw up, which include their ability to help students clear even the toughest of exams. In a paper titled 'Would ChatGPT Get a Wharton MBA? A Prediction Based on Its Performance in the Operations Management Course', Christian Terwiesch, a professor at the Wharton School, writes, "The purpose of this paper is to document how ChatGPT performed on the final exam of a typical MBA core course, Operations Management." He adds, "Finally, ChatGPT is remarkably good at modifying its answers in response to human hints. In other words, in the instances where it initially failed to match the problem with the right solution method, ChatGPT was able to correct itself after receiving an appropriate hint from a human expert. Considering this performance, ChatGPT would have received a B to B- grade on the exam. This has important implications for business school education, including the need for exam policies, curriculum design focusing on collaboration between human and AI, opportunities to simulate real-world decision-making processes, the need to teach creative problem solving, improved teaching productivity, and more." Interestingly, ChatGPT is certain to get more traction thanks to its collaboration with Microsoft, which has announced that it will integrate ChatGPT's "underlying technology into its Edge browser and Bing search to let users get results and online material with the help of AI".

Acclaimed philosopher, linguist, and public intellectual Noam Chomsky offers a pithy response to a query from Open about the incentives that students will have for writing their theses and assignments, now that there are AI tools to help them write essays and dissertations. "If a course doesn't provide students incentives [to be honest], they shouldn't be taking it," he says, emphasising that what Sam Altman-headed OpenAI, which billionaire Elon Musk co-founded and helped finance, is promoting is hi-tech plagiarism. Some technology experts, meanwhile, aver that we will soon have detection software in place to verify whether an exam or an essay was written using ChatGPT or similar offerings. "If a student is found to have written a paper using any of these tools, he or she will find it very embarrassing. That will be a huge deterrent, in my opinion," says a senior executive with a technology company who didn't wish to be named because he is not authorised to speak to the media.

FOR HIS PART, noted US-based entrepreneur-turned-academic and author Vivek Wadhwa says that the incentives for students now will be to learn and use these AI tools because they will do all the homework. "Who wants to do boring writing and research when an AI can do all of the work for them?" he asks.

Chomsky notes that the answer to the challenge before professors and universities in this age of AI, which is to get students to learn on their own and not fall back on new AI tools, is "serious and challenging courses". Renowned Israeli-American behavioural economist Dan Ariely has some stimulating thoughts on how to inspire students to take their learning seriously and be creative, so as to keep them away from AI-aided shortcuts. Ariely, James B Duke Professor of Psychology and Behavioural Economics at Duke University, tells Open that a crucial part of the incentive for students to love the process of learning is to offer intrinsic motivation. "This is true for lots of things. What is the motivation for people to learn and improve? Not everything is about external rewards and ease of doing things. People write poetry. People learn for the joy of learning. So, I think that there will be intrinsic motivation for them. And universities will have to [definitely] also do extrinsic motivation. They will have to think about how to create a moral code." Universities will also need certain guardrails, including punishments, to check the rampant use of AI to skip learning. The logic here is to regulate the excessive use of AI for the successful completion of a course in the wake of reports that AI can be used to pass even law, business, and medical school exams.

Wadhwa, who is also a well-known speaker and columnist, proposes that professors and universities need to rapidly learn these tools as well so they can understand what has changed. "And then they need to rethink how they teach and test students. So far, academia has been slow to respond to technology changes but they cannot ignore this disruption," he tells Open.

Parallel to all this, there are also concerns about the outcome of efforts by companies like Elon Musk's Neuralink, which, in the long run, wants to implant chips in the brain to make humans do superhuman work. In fact, in 2021, the company showed a macaque playing a simple video game after being implanted with a brain chip. To a question on what all this means for learning, Chomsky tells Open, "It's too remote, if even possible at all, to pay much attention to, in my opinion." Wadhwa says he doesn't believe in any of Elon Musk's technological promises. "Just as he has promised for six years that the Tesla FSD will work and the cars will soon drive themselves, Neuralink is still science fiction. There will be breakthroughs on this front but not for another 10-20 years," says this Silicon Valley-based alumnus of New York University. For their part, senior executives at Google and Microsoft didn't respond to queries from Open.

Many experts in education and languages who spoke with Open argue that the best way to tackle cheating through technology is technology. This is why many of them bank on potential new technologies that will call out students for hi-tech plagiarism. That these AI tools aren't always good with facts, confusing a book's author with its reviewer in some cases, for instance, makes them less appealing than they appear. And yet, the fear of AI, especially of new technologies in the segment, persists.