How to Preempt Responsibility

THIS WEEK, Sam Altman, a co-founder and the CEO of OpenAI, the company that set off the artificial intelligence (AI) revolution with its release of ChatGPT, a chatbot that answers like a human to any question you throw at it, appeared before the US Congress and said that his company and others like it needed to be regulated because great danger is imminent. Time magazine, reporting on it, said: "And while he said he is ultimately optimistic that innovation will benefit people on a grand scale, Altman echoed his previous assertion that lawmakers should create parameters for AI creators to avoid causing 'significant harm to the world.' 'We think it can be a printing press moment,' Altman said. 'We have to work together to make it so.'"

This is a little unusual. Imagine Mark Zuckerberg launching Facebook and then asking for it to be regulated. He didn't, because regulation is government interference, and that is, more often than not, bad for business. Regulation arrives eventually in any case, but it is usually exploitation of consumers, or distress felt by them, that leads to it. With AI, the parties demanding it most enthusiastically are the very ones at the helm. Some time back, Elon Musk, the owner of Tesla and Twitter, asked for a six-month pause on all AI development. In about five years, he will probably be one of the leaders in this arena. But do these pioneering entrepreneurs really care about the fallout of what they are doing? Or are they more concerned with preempting the responsibility that will come with the dangers this technology unleashes?

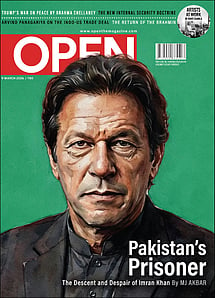

Imran Khan: Pakistan’s Prisoner

27 Feb 2026 - Vol 04 | Issue 60

The descent and despair of Imran Khan

For example, ChatGPT itself is notoriously unreliable with facts. It simply makes things up when it doesn't have an answer to a question. The company did not tell users this when it first let the product loose, and the public learnt it the hard way through their own experience. ChatGPT also turned out to be politically biased, leaning liberal and leftwards, and the company offered no disclaimer about that either. This is being corrected in later versions, but if they had been working on the product for years, why wouldn't they have known about the bias? They can't foresee everything, but they could at least address what they already know. Why ask the government to regulate when they could do it themselves? Or take Musk's desire for a moratorium on AI research. It sounds altruistic until you consider that he has to play catch-up with others like OpenAI and Google in this arena. It is good for him if time stops for six months.

Maybe there is a genuine desire to negotiate a new technology that is certain to change the world in unforeseen ways. But less regulation is still often better at an initial stage, as it was with the internet itself or social media. A lot of evils entered with them too, but these were dealt with as they came. More regulation in the beginning would only have stunted the growth of those technologies. Trying to anticipate every danger just gives the government too much power, and why would that power not be open to abuse too?